Introduction

This cycle of the crypto bull market has been the most uninspiring in terms of commercial innovation. Unlike the previous bull market, which saw phenomenal trends like DeFi, NFTs, and GameFi, this cycle lacks significant industry hotspots. Consequently, there has been sluggish growth in user base, industry investment, and developer activity.

This trend is also evident in the price of crypto assets. Over the entire cycle, most altcoins, including ETH, have consistently lost value relative to BTC. The valuation of smart contract platforms is largely driven by the prosperity of their applications. When innovation in application development stagnates, it becomes challenging for the valuation of public chains to rise.

However, artificial intelligence (AI), as a relatively new sector in the crypto business landscape, could benefit from the explosive growth and ongoing hotspots in the broader commercial world. This gives AI projects within the crypto space the potential to attract significant incremental attention.

In the IO.NET report published by Mint Ventures in April, the necessity of integrating AI with crypto was thoroughly analyzed. The advantages of crypto-economic solutions—such as determinism, efficient resource allocation, and trustlessness—could potentially address the three major challenges of AI: randomness, resource intensity, and the difficulty in distinguishing between human and machine.

In the AI sector of the crypto economy, I want to discuss and explore several critical issues in this article, including:

- Emerging or potentially explosive narratives in the crypto AI sector.

- The catalytic paths and logical frameworks of these narratives.

- Crypto + AI projects related to these narratives.

- The risks and uncertainties involved in the development of the crypto + AI sector.

Please note that this article reflects my current thinking and may evolve. The opinions here are subjective, and there may be errors in facts, data, and logical reasoning. This is not financial advice; feedback and discussion are welcome.

The Next Wave of Narratives in the Crypto AI Sector

Before diving into the emerging trends in the crypto AI sector, let’s first examine the current leading narratives. Based on market cap, those with a valuation exceeding $1 billion include:

- Computing Power

  - Render Network ($RNDR): circulating market cap of $3.85 billion

  - Akash: circulating market cap of $1.2 billion

  - IO.NET: valued at $1 billion in its latest financing round

- Algorithm Networks

  - Bittensor ($TAO): circulating market cap of $2.97 billion

- AI Agents

  - Fetch.ai ($FET): pre-merger circulating market cap of $2.1 billion

*Data as of May 24, 2024.

Beyond the fields mentioned above, which AI sector will produce the next project with a market cap exceeding $1 billion?

I believe we can speculate on this from two perspectives: the “industrial supply side” narrative and the “GPT moment” narrative.

Examining the Opportunities in the Energy and Data Fields from the Perspective of the Industrial Supply Side

From the perspective of the industrial supply side, four key driving forces behind AI development are:

- Algorithms: High-quality algorithms can execute training and inference tasks more efficiently.

- Computing Power: Both model training and inference demand substantial computing power provided by GPU hardware. This requirement represents a major industrial bottleneck, with the current chip shortage driving up prices for mid-to-high-end chips.

- Energy: AI data centers require significant energy consumption. Beyond the electricity needed for GPUs to perform computational tasks, substantial energy is also needed for cooling the GPUs. In large data centers, cooling systems alone account for about 40% of total energy consumption.

- Data: Enhancing the performance of large models necessitates expanding training parameters, leading to a massive demand for high-quality data.

Of the four industrial driving forces mentioned above, the algorithm and computing power sectors already have crypto projects with circulating market caps exceeding $1 billion. However, the energy and data sectors have yet to see projects reach similar market caps.

In fact, shortages in the supply of energy and data may soon emerge, potentially becoming the next industry hotspots and driving a surge of related projects in the crypto space.

Let’s begin with energy.

On February 29, 2024, Elon Musk remarked at the Bosch ConnectedWorld 2024 conference, “I predicted the chip shortage more than a year ago, and the next shortage will be electricity. I think next year you will see that they just can’t find enough electricity to run all the chips.”

For concrete figures, consider the “AI Index Report” published annually by the Stanford University Institute for Human-Centered Artificial Intelligence, led by Fei-Fei Li. In its 2022 report covering 2021, the research group estimated that AI energy consumption that year accounted for only 0.9% of global electricity demand, putting limited pressure on energy and the environment. In 2023, however, the International Energy Agency (IEA) reported that global data centers consumed approximately 460 terawatt-hours (TWh) of electricity in 2022, accounting for 2% of global electricity demand. It also predicted that by 2026, global data center energy consumption will be at least 620 TWh, potentially reaching up to 1,050 TWh.

In reality, the International Energy Agency’s estimates remain conservative, as numerous AI projects poised to launch will demand significantly more energy than anticipated in 2023.

For example, Microsoft and OpenAI are planning the Stargate project. This ambitious initiative is set to commence in 2028 and be completed around 2030. The project aims to build a supercomputer equipped with millions of dedicated AI chips, providing OpenAI with unprecedented computing power to advance its research in artificial intelligence, particularly large language models. The estimated cost of this project exceeds $100 billion, which is 100 times the cost of current large data centers.

The energy consumption for the Stargate project alone is expected to reach 50 TWh.
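To put the figures quoted above side by side, here is a minimal Python sketch. It uses only the numbers cited in this article; the variable and function names are my own, and treating Stargate's 50 TWh as an annual figure comparable to the IEA's annual totals is an assumption on my part.

```python
# Figures quoted in the text above (IEA data-center estimates, reported Stargate estimate).
DATA_CENTER_TWH_2022 = 460.0      # global data center consumption in 2022
SHARE_OF_GLOBAL_DEMAND = 0.02     # stated as 2% of global electricity demand
FORECAST_2026_LOW_TWH = 620.0     # lower bound of the 2026 forecast
FORECAST_2026_HIGH_TWH = 1050.0   # upper bound of the 2026 forecast
STARGATE_TWH = 50.0               # assumption: treated as an annual figure


def growth_vs_2022(forecast_twh: float) -> float:
    """Fractional growth of a forecast relative to 2022 consumption."""
    return forecast_twh / DATA_CENTER_TWH_2022 - 1.0


# Global demand implied by "460 TWh is 2% of the total".
implied_global_demand_twh = DATA_CENTER_TWH_2022 / SHARE_OF_GLOBAL_DEMAND

low_growth = growth_vs_2022(FORECAST_2026_LOW_TWH)
high_growth = growth_vs_2022(FORECAST_2026_HIGH_TWH)

# Stargate alone, relative to everything data centers consumed in 2022.
stargate_share_of_2022 = STARGATE_TWH / DATA_CENTER_TWH_2022

print(f"Implied global demand: {implied_global_demand_twh:,.0f} TWh")
print(f"2026 forecast growth vs 2022: {low_growth:.1%} to {high_growth:.1%}")
print(f"Stargate vs 2022 data center total: {stargate_share_of_2022:.1%}")
```

Under these assumptions, a single project would consume roughly a tenth of what all data centers worldwide used in 2022, which helps explain why the IEA range may prove conservative.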

As a result, OpenAI’s founder Sam Altman stated at the Davos Forum this January: “Future artificial intelligence will require energy breakthroughs, as the electricity consumed by AI will far exceed expectations.”

Following computing power and energy, the next major shortage in the rapidly growing AI industry is likely to be data.

In fact, the shortage of high-quality data necessary for AI has already become a reality.

Through the ongoing evolution of GPT, we have largely grasped the pattern of enhancing the capabilities of large language models—by expanding model parameters and training data, the capabilities of these models can be exponentially increased. This process shows no immediate technical bottleneck.

However, high-quality and publicly available data are likely to become increasingly scarce in the future. AI products may face supply-demand conflicts similar to those experienced with chips and energy.

Firstly, there is an increase in disputes over data ownership.

On December 27, 2023, The New York Times filed a lawsuit against OpenAI and Microsoft in the U.S. District Court, alleging that they used millions of its articles without permission to train the GPT model. The New York Times is seeking billions of dollars in statutory and actual damages for the “illegal copying and use of uniquely valuable works” and is demanding the destruction of all models and training data that include its copyrighted materials.

At the end of March 2024, The New York Times issued a new statement, expanding its accusations beyond OpenAI to include Google and Meta. The statement claimed that OpenAI had used a speech recognition tool called Whisper to transcribe a large number of YouTube videos into text, which was then used to train GPT-4. The New York Times argued that it has become common practice for large companies to employ underhanded tactics in training their AI models. They also pointed out that Google is engaged in similar practices, converting YouTube video content into text for their model training, essentially infringing on the rights of video content creators.

The lawsuit between The New York Times and OpenAI, dubbed the first “AI copyright case,” is unlikely to be resolved quickly due to its complexity and the profound impact it could have on the future of content and the AI industry. One potential outcome is an out-of-court settlement, with deep-pocketed Microsoft and OpenAI paying a significant amount in compensation. However, future disputes over data copyright will inevitably drive up the overall cost of high-quality data.

Furthermore, Google, as the world’s largest search engine, has been reported to be considering charging fees for its search services—not for the general public, but for AI companies.

Google’s search engine servers hold vast amounts of content: essentially all the content that has appeared on web pages since the start of the 21st century. AI-driven search products, such as Perplexity, as well as Kimi and Meta Sota developed by Chinese companies, process the data retrieved from these searches through AI and then deliver it to users. Introducing charges for AI companies to access search engine data will undoubtedly raise the cost of obtaining data.

Furthermore, AI giants are not just focusing on public data; they are also targeting non-public internal data.

Photobucket, a long-established image and video hosting website, once boasted 70 million users and nearly half of the U.S. online photo market share in the early 2000s. However, with the rise of social media, Photobucket’s user base has significantly dwindled, now standing at only 2 million active users, each paying a steep annual fee of $399. According to its user agreement and privacy policy, accounts inactive for more than a year are reclaimed, granting Photobucket the right to use the uploaded images and videos. Photobucket’s CEO, Ted Leonard, disclosed that their 1.3 billion photos and videos are extremely valuable for training generative AI models. He is currently negotiating with several tech companies to sell this data, with prices ranging from 5 cents to 1 dollar per photo and more than 1 dollar per video. Leonard estimates that Photobucket’s data could be worth over 1 billion dollars.
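As a rough sanity check on that valuation, the sketch below multiplies the item count by the quoted per-photo price range. It is a simplification under stated assumptions: all 1.3 billion items are priced as photos (videos are quoted at a higher rate), and the names are my own.

```python
# Figures quoted in the text; every item is priced as a photo for simplicity (assumption).
ITEM_COUNT = 1_300_000_000
PRICE_LOW_USD = 0.05
PRICE_HIGH_USD = 1.00
TARGET_VALUE_USD = 1_000_000_000  # the "over 1 billion dollars" estimate


def dataset_value(item_count: int, price_per_item_usd: float) -> float:
    """Total value if every item sells once at the given price."""
    return item_count * price_per_item_usd


low_value = dataset_value(ITEM_COUNT, PRICE_LOW_USD)
high_value = dataset_value(ITEM_COUNT, PRICE_HIGH_USD)

# Average price per item needed to reach the 1 billion dollar estimate.
breakeven_price = TARGET_VALUE_USD / ITEM_COUNT

print(f"Value range: ${low_value:,.0f} to ${high_value:,.0f}")
print(f"Average price needed for $1B: ${breakeven_price:.2f} per item")
```

On these figures, the estimate of more than 1 billion dollars only holds near the top of the quoted price range, or if the same data is sold to several buyers.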

The research team EPOCH, which specializes in AI development trends, published a report titled “Will we run out of data? An analysis of the limits of scaling datasets in Machine Learning.” This report, based on the 2022 usage of data in machine learning and the generation of new data, while also considering the growth of computing resources, concluded that high-quality text data could be exhausted between February 2023 and 2026, and image data might run out between 2030 and 2060. Without significant improvements in data utilization efficiency or the emergence of new data sources, the current trend of large machine learning models that depend on massive datasets could slow down.

Considering the current trend of AI giants buying data at high prices, it seems that free, high-quality text data has indeed run dry, validating EPOCH’s prediction from two years ago.

Concurrently, solutions to the “AI data shortage” are emerging, specifically AI-data-as-a-service.

Defined.ai is one such company that offers customized, high-quality real data for AI companies.

The business model of Defined.ai works as follows: AI companies specify their data requirements, such as needing images with a certain resolution quality, free from blurriness and overexposure, and with authentic content. Companies can also request specific themes based on their training tasks, like nighttime photos of traffic cones, parking lots, and signposts to enhance AI’s night scene recognition. Members of the public can accept these tasks and upload their photos, which are then reviewed by Defined.ai. Approved images are paid for, typically at $1-2 per high-quality image, $5-7 per short video clip, and $100-300 for a high-quality video over 10 minutes. Text is compensated at $1 per thousand words, with task completers earning about 20% of the fees. This approach to data provision could become a new crowdsourcing business akin to “data labeling.”
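The pricing described above can be sketched as a small calculation. This is only one reading of the figures: I assume the quoted prices are the fees charged per item and that the contributor receives roughly 20% of them. The class, function names, and example batch are hypothetical and are not part of any Defined.ai API.

```python
from dataclasses import dataclass

# Assumption: contributors receive about 20% of the quoted fees.
CONTRIBUTOR_SHARE = 0.20


@dataclass(frozen=True)
class FeeRange:
    low_usd: float
    high_usd: float


# Per-item fee ranges as quoted in the text.
FEES = {
    "image": FeeRange(1.0, 2.0),
    "short_clip": FeeRange(5.0, 7.0),
    "long_video": FeeRange(100.0, 300.0),
    "text_per_1000_words": FeeRange(1.0, 1.0),
}


def batch_fee_range(quantities: dict) -> tuple:
    """Low and high total fees for a batch of approved submissions."""
    low = sum(FEES[kind].low_usd * qty for kind, qty in quantities.items())
    high = sum(FEES[kind].high_usd * qty for kind, qty in quantities.items())
    return low, high


def contributor_payout_range(quantities: dict) -> tuple:
    """Low and high payout to the contributor under the assumed share."""
    low, high = batch_fee_range(quantities)
    return low * CONTRIBUTOR_SHARE, high * CONTRIBUTOR_SHARE


# Hypothetical batch: 10 images, 2 short clips, 3,000 words of text.
example_batch = {"image": 10, "short_clip": 2, "text_per_1000_words": 3}
fee_low, fee_high = batch_fee_range(example_batch)
payout_low, payout_high = contributor_payout_range(example_batch)

print(f"Fees: ${fee_low:.2f} to ${fee_high:.2f}")
print(f"Contributor payout: ${payout_low:.2f} to ${payout_high:.2f}")
```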

Global task distribution, economic incentives, data asset pricing, circulation, and privacy protection, with everyone able to participate, sound very much like a business model suited to the Web3 paradigm.

Analyzing Crypto + AI Projects from the Perspective of Industrial Supply

The attention generated by the chip shortage has extended into the crypto industry, positioning decentralized computing power as the most popular and highest-valued AI sector to date.

If the supply and demand conflicts in the AI industry for energy and data become acute in the next 1-2 years, what narrative-related projects are currently present in the crypto industry?

Let’s start with energy-concept projects.

Currently, energy projects listed on major centralized exchanges (CEX) are very scarce, with Power Ledger and its native token $POWR being the sole example.

Power Ledger was established in 2017 as a blockchain-based comprehensive energy platform aimed at decentralizing energy trading. It promotes direct electricity trading among individuals and communities, supports the widespread adoption of renewable energy, and ensures transaction transparency and efficiency through smart contracts. Initially, Power Ledger operated on a consortium chain adapted from Ethereum. In the second half of 2023, Power Ledger updated its whitepaper and launched its own comprehensive public chain, based on Solana’s technical framework, to handle high-frequency microtransactions in the distributed energy market. Power Ledger’s primary business areas currently include:

- Energy Trading: Enabling users to buy and sell electricity directly in a peer-to-peer way, particularly from renewable sources.

- Environmental Product Trading: Facilitating the trading of carbon credits and renewable energy certificates, as well as financing based on environmental products.

- Public Chain Operations: Attracting application developers to build on the Power Ledger blockchain, with transaction fees paid in $POWR tokens.

The current circulating market cap of Power Ledger is $170 million, with a fully diluted market cap of $320 million.

In comparison to energy-concept crypto projects, there is a richer variety of targets in the data sector.

Listed below are the data sector projects I am currently following, which have been listed on at least one major CEX, such as Binance, OKX, or Coinbase, arranged by fully diluted valuation (FDV) from low to high:

1. Streamr ($DATA)

Streamr’s value proposition is to build a decentralized real-time data network where users can freely trade and share data while retaining full control over their own information. Through its data marketplace, Streamr aims to enable data producers to sell data streams directly to interested consumers, eliminating the need for intermediaries, thus reducing costs and increasing efficiency.

In real-world applications, Streamr has collaborated with another Web3 vehicle hardware project, DIMO, to collect data such as temperature and air pressure through DIMO hardware sensors installed in vehicles. This data is then transmitted as weather data streams to organizations that need it.

Unlike other data projects, Streamr focuses more on IoT and hardware sensor data. Besides the DIMO vehicle data, other notable projects include real-time traffic data streams in Helsinki. Consequently, Streamr’s token, $DATA, experienced a significant surge, doubling its value in a single day during the peak of the DePIN concept last December.

Currently, Streamr’s circulating market cap is $44 million, with a fully diluted market cap of $58 million.

2. Covalent ($CQT)

Unlike other data projects, Covalent focuses on providing blockchain data. The Covalent network reads data from blockchain nodes via RPC, processes and organizes it, and creates an efficient query database. This allows Covalent users to quickly retrieve the information they need without performing complex queries directly on blockchain nodes. Such services are referred to as “blockchain data indexing.”

Covalent primarily serves enterprise customers, including various DeFi protocols, and many centralized crypto companies such as Consensys (the parent company of MetaMask), CoinGecko (a well-known crypto asset tracking site), Rotki (a tax tool), and Rainbow (a crypto wallet). Additionally, traditional financial industry giants like Fidelity and Ernst & Young are also among Covalent’s clients. According to Covalent’s official disclosures, the project’s revenue from data services has already surpassed that of the leading project in the same field, The Graph.

The Web3 industry, with its integrated, transparent, authentic, and real-time on-chain data, is poised to become a high-quality data source for specialized AI scenarios and specific “small AI models.” Covalent, as a data provider, has already started offering data for various AI scenarios and has introduced verifiable structured data tailored for AI applications.

For instance, Covalent provides data for the on-chain smart trading platform SmartWhales, which uses AI to identify profitable trading patterns and addresses. Entendre Finance leverages Covalent’s structured data, processed by AI technology for real-time insights, anomaly detection, and predictive analytics.

Currently, the main application scenarios for Covalent’s on-chain data services are predominantly in the financial field. However, as Web3 products and data types continue to diversify, the use cases for on-chain data are expected to expand further.

The circulating market cap of Covalent is $150 million, with a fully diluted market cap of $235 million, offering a noticeable valuation advantage compared to The Graph, a leading project in the blockchain data indexing sector.

3. Hivemapper ($HONEY)

Among all data types, video data typically commands the highest price. Hivemapper can provide AI companies with both video and map information. Hivemapper is a decentralized global mapping project that aims to create a detailed, dynamic, and accessible map system through blockchain technology and community contributions. Participants capture map data using dashcams and add it to the open-source Hivemapper data network, earning $HONEY tokens as rewards for their contributions. To enhance network effects and reduce interaction costs, Hivemapper is built on Solana.

Hivemapper was founded in 2015 with the original vision of creating maps using drones. However, this approach proved difficult to scale, leading the company to shift towards using dashcams and smartphones to capture geographic data, thereby reducing the cost of global map creation.

Compared to street view and mapping software like Google Maps, Hivemapper leverages an incentive network and crowdsourcing model to more efficiently expand map coverage, maintain the freshness of real-world map data, and enhance video quality.

Before the surge in AI demand for data, Hivemapper’s main customers included the autonomous driving departments of automotive companies, navigation service providers, governments, insurance companies, and real estate firms. Today, Hivemapper can provide extensive road and environmental data to AI and large models through APIs. By continuously updating image and road feature data streams, AI and ML models will be better equipped to translate this data into enhanced capabilities, enabling them to perform tasks related to geographic location and visual judgment more effectively.

Currently, the circulating market cap of $HONEY, the native token of Hivemapper, is $120 million, with a fully diluted market cap of $496 million.

Besides the aforementioned projects, other notable projects in the data sector include:

1. The Graph ($GRT): With a circulating market cap of $3.2 billion and a fully diluted valuation (FDV) of $3.7 billion, The Graph provides blockchain data indexing services similar to Covalent.

2. Ocean Protocol ($OCEAN): Ocean Protocol has a circulating market cap of $670 million and an FDV of $1.45 billion. The project aims to facilitate the exchange and monetization of data and data-related services through its open-source protocol. Ocean Protocol connects data consumers with data providers, ensuring trust, transparency, and traceability in data sharing. The project is set to merge with Fetch.ai and SingularityNET, with the token converting to $ASI.
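To compare the valuations quoted for the energy and data projects above, one simple measure is the ratio of circulating market cap to fully diluted valuation. The sketch below uses only the figures cited in this article (in millions of dollars); the names are my own, and the ratio is an illustrative yardstick rather than a valuation method.

```python
# (circulating market cap, fully diluted valuation) in millions of USD, as quoted in the text.
PROJECTS = {
    "Power Ledger": (170, 320),
    "Streamr": (44, 58),
    "Covalent": (150, 235),
    "Hivemapper": (120, 496),
    "The Graph": (3200, 3700),
    "Ocean Protocol": (670, 1450),
}


def circulating_ratio(circulating: float, fdv: float) -> float:
    """Share of the fully diluted valuation that is already circulating."""
    return circulating / fdv


ratios = {
    name: circulating_ratio(circulating, fdv)
    for name, (circulating, fdv) in PROJECTS.items()
}

# Sort from the lowest ratio (most value not yet circulating) to the highest.
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{name:<15} {ratio:.1%}")

lowest_ratio_project = min(ratios, key=ratios.get)
```

A lower ratio means a larger share of the fully diluted valuation is not yet in circulation, which is worth keeping in mind when comparing circulating market caps across projects.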

The Reappearance of the GPT Moment and the Advent of Artificial General Intelligence

In my view, the “AI sector” in the crypto industry truly began in 2023, the same year ChatGPT shocked the world. The rapid surge of crypto AI projects was largely driven by the “wave of enthusiasm” following the explosive growth of the AI industry.

Despite continuous capability upgrades with models like GPT-4 and GPT-4 Turbo, the impressive video creation abilities demonstrated by Sora, and the rapid development of large language models beyond OpenAI, it’s undeniable that AI’s technological advances are delivering a diminishing cognitive shock to the public. People are gradually adopting AI tools, and large-scale job replacement has yet to materialize.

Will we witness another “GPT moment” in the future, a leap in development that shocks the public and makes them realize that their lives and work will be fundamentally changed?

This moment could be the arrival of artificial general intelligence (AGI).

AGI, or artificial general intelligence, refers to machines that possess human-like general cognitive abilities, capable of solving a wide range of complex problems, rather than being limited to specific tasks. AGI systems have high levels of abstract thinking, extensive background knowledge, comprehensive common-sense reasoning, causal understanding, and cross-disciplinary transfer learning abilities. AGI performs at the level of the best humans across various fields and, in terms of overall capability, completely surpasses even the most outstanding human groups.

In fact, whether depicted in science fiction novels, games, and films, or reflected in the public’s expectations following the rapid rise of GPT, society has long anticipated the emergence of AGI that surpasses human cognitive levels. In other words, GPT itself is a precursor to AGI.

The reason GPT has such a profound industrial impact and psychological shock is that its deployment and performance have far exceeded public expectations. People did not anticipate that an AI system capable of passing the Turing test would arrive so quickly and with such impressive capabilities.

In fact, artificial general intelligence (AGI) may once again create a “GPT moment” within the next 1-2 years: just as people are becoming accustomed to using GPT as an assistant, they may soon discover that AI has evolved beyond merely being an assistant. It could independently tackle highly creative and challenging tasks, including solving problems that have stumped top human scientists for decades.

On April 8th of this year, Elon Musk was interviewed by Nicolai Tangen, the CEO of Norges Bank Investment Management, which manages Norway’s sovereign wealth fund, and discussed the timeline for the emergence of AGI.

Musk stated, “If we define AGI as being smarter than the smartest humans, I think it is very likely to appear by 2025.”

According to Elon Musk’s prediction, it would take at most another year and a half for AGI to arrive. However, he added a condition: “provided that electricity and hardware can keep up.”

The benefits of AGI’s arrival are obvious.

It means that human productivity will make a significant leap forward, and many scientific problems that have stumped us for decades will be resolved. If we define “the smartest humans” as Nobel Prize winners, it means that, provided we have enough energy, computing power, and data, we could have countless tireless “Nobel laureates” working around the clock to tackle the most challenging scientific problems.

In reality, Nobel Prize winners are not as rare as one in hundreds of millions. Their abilities and intellect are often at the level of top university professors, but through probability and luck they chose the right direction, persisted, and achieved results. Many of their equally capable peers might have won Nobel Prizes in a parallel universe of scientific research. Unfortunately, there are still not enough top university professors working on scientific breakthroughs, so the speed of “exploring all the correct directions in scientific research” remains very slow.

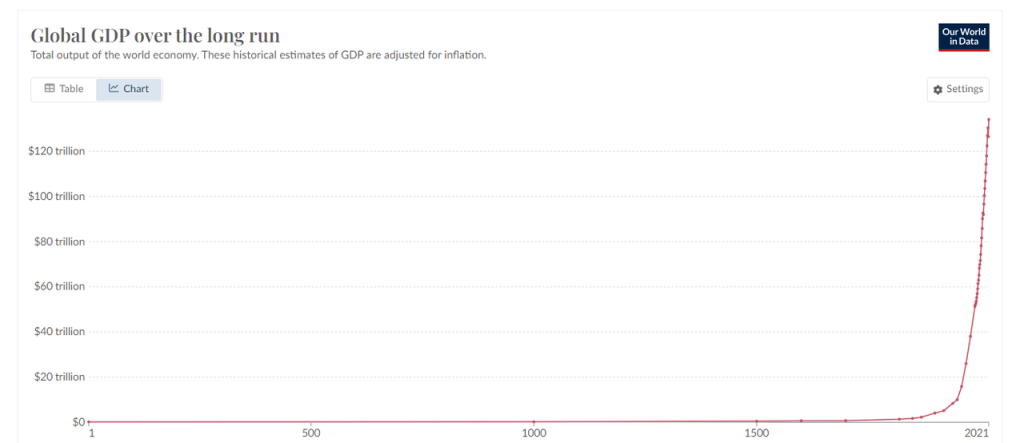

With AGI, and given sufficient energy and computing power, we could have an unlimited number of “Nobel laureate-level” AGIs conducting in-depth exploration in any potential direction for scientific breakthroughs. The speed of technological advancement would increase exponentially. Over the next 10 to 20 years, this acceleration could lead to a hundredfold increase in the supply of resources we currently consider expensive and scarce, such as food, new materials, medicines, and high-quality education. The cost of acquiring these resources would decrease dramatically. We would be able to support a larger population with fewer resources, and per capita wealth would increase rapidly.

This might sound somewhat sensational, so let’s consider two examples. These examples were also used in my previous research report on IO.NET:

- In 2018, Frances Arnold, Nobel Laureate in Chemistry, said during her award ceremony, “Today we can for all practical purposes read, write, and edit any sequence of DNA, but we cannot compose it.” Five years later, in 2023, a team of researchers from Stanford University and Salesforce Research published a paper in “Nature Biotechnology.” Using a large language model refined from GPT-3, they generated an entirely new catalog of 1 million proteins. Among these, they discovered two proteins with distinct structures, both endowed with antibacterial function, potentially paving the way for new strategies against bacterial resistance beyond traditional antibiotics. This signifies a monumental leap in overcoming the hurdles of protein creation with AI’s assistance.

- Before this, the artificial intelligence algorithm AlphaFold predicted the structures of nearly all 214 million known protein types on Earth within 18 months, a milestone that amplifies the achievements of structural biologists throughout history by several orders of magnitude.

The transformation is on the way, and the arrival of AGI will further accelerate this process.

However, the arrival of AGI also presents enormous challenges.

AGI will not only replace a large number of knowledge workers, but also those in physical service industries, which are currently considered to be “less impacted by AI.” As robotic technology matures and new materials lower production costs, the proportion of jobs replaced by machines and software will rapidly increase.

When this happens, two issues that once seemed very distant will quickly surface:

- The employment and income challenges of a large unemployed population

- How to distinguish between AI and humans in a world where AI is ubiquitous

Worldcoin and Worldchain are attempting to provide solutions by implementing a universal basic income (UBI) system to ensure basic income for the public, and by using iris-based biometrics to distinguish between humans and AI.

In fact, UBI is not just a theoretical concept; it has been tested in real-world practice. Countries such as Finland and England have conducted UBI experiments, while political parties in Canada, Spain, and India are actively proposing and promoting similar initiatives.

The advantage of using a biometric identification and blockchain model for UBI distribution lies in its global reach, providing broader coverage of the population. Furthermore, the user network expanded through income distribution can support other business models, such as financial services (DeFi), social networking, and task crowdsourcing, creating synergy within the network’s commercial ecosystem.

One of the notable projects addressing the impact of AGI’s arrival is Worldcoin ($WLD), with a circulating market cap of $1.03 billion and a fully diluted market cap of $47.2 billion.

Risks and Uncertainties in Crypto AI Narratives

Unlike many research reports previously released by Mint Ventures, this article contains a significant degree of subjectivity in its narrative forecasting and predictions. Readers should view the content of this article as a speculative discussion rather than a forecast of the future. The narrative forecasts mentioned above face numerous uncertainties that could lead to incorrect assumptions. These risks or influencing factors include but are not limited to:

Energy Risk: Rapid Decrease in Energy Consumption Due to GPU Upgrades

Despite the surging energy demand for AI, chip manufacturers like NVIDIA are continually upgrading their hardware to deliver higher computing power with lower energy consumption. For instance, in March 2024, NVIDIA released the new generation AI computing card GB200, which integrates two B200 GPUs and one Grace CPU. Its training performance is four times that of the previous mainstream AI GPU H100, and its inference performance is seven times that of the H100, while requiring only one-quarter of the energy consumption of the H100. Nonetheless, the appetite for AI-driven power continues to grow. With the decrease in unit energy consumption and the further expansion of AI application scenarios and demand, the total energy consumption might actually increase.
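The trade-off described above comes down to a single multiplication: total energy is compute demand times energy per unit of compute. The sketch below assumes the quoted "one-quarter" figure applies per unit of work done, which is my reading of the text rather than an NVIDIA specification; the demand growth scenarios are hypothetical.

```python
# Assumption: the new hardware needs one-quarter of the energy per unit of work.
ENERGY_PER_UNIT_RATIO = 0.25


def total_energy_ratio(demand_growth: float,
                       energy_per_unit_ratio: float = ENERGY_PER_UNIT_RATIO) -> float:
    """Total energy use relative to today, given growth in compute demand."""
    return demand_growth * energy_per_unit_ratio


# Demand growth at which efficiency gains are exactly cancelled out.
breakeven_demand_growth = 1.0 / ENERGY_PER_UNIT_RATIO

# Hypothetical scenarios: compute demand grows 2x, 4x, and 10x.
scenarios = {growth: total_energy_ratio(growth) for growth in (2.0, 4.0, 10.0)}

for growth, ratio in scenarios.items():
    print(f"Demand x{growth:g} -> total energy x{ratio:g}")
print(f"Break-even demand growth: x{breakeven_demand_growth:g}")
```

Under this assumption, total energy consumption still rises whenever compute demand grows more than fourfold, which is how lower unit consumption and higher total consumption can coexist.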

Data Risk: Project Q* and “Self-Generated Data”

There is a rumored project within OpenAI known as “Q*,” mentioned in internal communications to employees. According to Reuters, citing insiders at OpenAI, this could represent a significant breakthrough on OpenAI’s path to achieving superintelligence or artificial general intelligence (AGI). Q* is rumored to solve previously unseen mathematical problems through abstraction and generate its own data for training large models, without needing real-world data input. If this rumor is true, the bottleneck of large AI model training being constrained by the lack of high-quality data would be eliminated.

AGI Arrival: OpenAI’s Concerns

Whether AGI will truly arrive by 2025, as Musk predicts, remains uncertain, but it is only a matter of time. Worldcoin, as a direct beneficiary of the AGI narrative, faces its biggest concern from OpenAI, given that it is widely regarded as the “shadow token of OpenAI.”

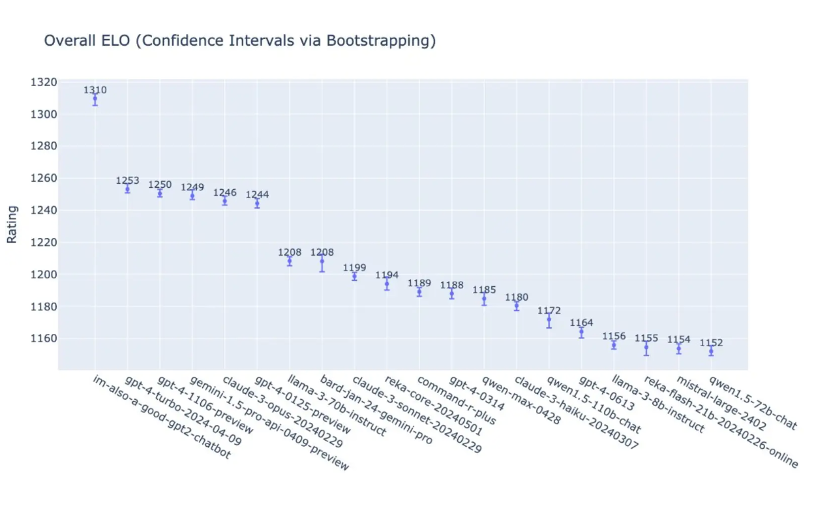

In the early hours of May 14, at its Spring product launch, OpenAI presented the comprehensive task scores of GPT-4o and 19 other large language models. According to the table, GPT-4o scored 1310, which visually appears significantly higher than the others. However, in terms of total score, it is only 4.5% higher than the second-place GPT-4 Turbo, 4.9% higher than Google’s Gemini 1.5 Pro in fourth place, and 5.1% higher than Anthropic’s Claude 3 Opus in fifth place.
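The percentages above can be turned back into approximate scores, which makes the size of the gap easier to see. This sketch back-calculates from the rounded percentages quoted here, so the results are estimates and not the values printed in OpenAI's table; the names are my own.

```python
GPT_4O_SCORE = 1310

# Lead of GPT-4o over each model, as quoted in the text.
LEADS = {
    "GPT-4 Turbo": 0.045,
    "Gemini 1.5 Pro": 0.049,
    "Claude 3 Opus": 0.051,
}


def implied_score(leader_score: float, lead: float) -> float:
    """Score of a model that the leader beats by the given fraction."""
    return leader_score / (1.0 + lead)


implied_scores = {name: implied_score(GPT_4O_SCORE, lead) for name, lead in LEADS.items()}
point_gaps = {name: GPT_4O_SCORE - score for name, score in implied_scores.items()}

for name in LEADS:
    print(f"{name:<15} score ~{implied_scores[name]:.0f}, gap ~{point_gaps[name]:.0f} points")
```

On these estimates, the top five models sit within roughly 65 points of each other on a scale above 1,200.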

Since GPT-3.5 first stunned the world, only a little over a year has passed, and OpenAI’s competitors have already closed the gap significantly (despite GPT-5 not yet being released, which is expected to happen this year). The question of whether OpenAI can maintain its industry-leading position in the future is becoming increasingly uncertain. If OpenAI’s leading advantage and dominance are diluted or even surpassed, then the narrative value of Worldcoin as OpenAI’s shadow token will also diminish.

In addition to Worldcoin’s iris authentication solution, more and more competitors are entering the market. For instance, the palm scan ID project Humanity Protocol has recently completed a new funding round, raising $30 million at a $1 billion valuation. LayerZero Labs has also announced that it will operate on Humanity and join its validator node network, using ZK proofs to authenticate credentials.

Conclusion

In conclusion, while I have extrapolated potential future narratives for the crypto AI sector, it is important to recognize that it differs from native crypto sectors like DeFi. It is largely a product of the AI hype spilling over into the crypto world. Many of the current projects have not yet proven their business models, and many projects are more like AI-themed memes (e.g., $RNDR resembles an NVIDIA meme, Worldcoin resembles an OpenAI meme). Readers should approach this cautiously.