
Introduction

In our last report, we noted that, compared with the previous two cycles, the current crypto bull run lacks new business models and asset narratives. Artificial Intelligence (AI) is one of the novel narratives in the Web3 space this cycle. This article examines IO.NET, the year’s hottest AI project, and organizes my thoughts on the following two questions:

- The necessity of AI+Web3 in the commercial landscape

- The necessity and challenges of deploying a decentralized computing network

I will then organize key information about IO.NET, a representative decentralized AI computing network, covering its product design, competitive landscape, and project background. I will also speculate on the project’s valuation metrics.

The insights in the section “The Business Logic Behind The Convergence of AI and Web3” draw inspiration from “The Real Merge” by Michael Rinko, a research analyst at Delphi Digital. This analysis assimilates and references ideas from his work, which is highly recommended for further reading.

Please note that this article reflects my current thinking and may evolve. The opinions here are subjective, and there may be errors in facts, data, and logical reasoning. This is not financial advice, but feedback and discussion are welcome.

The Business Logic Behind The Convergence of AI and Web3

2023: The “Annus Mirabilis” for AI

Reflecting on the annals of human development, it’s clear that technological breakthroughs catalyze profound transformations – from daily life to the industrial landscapes and the march of civilization itself.

Two years in human history, 1666 and 1905, are celebrated as the “Annus Mirabilis” (miracle year) in the history of science.

The year 1666 earned its title from Isaac Newton’s cascade of scientific breakthroughs. In a single year, he made fundamental advances in optics, developed calculus, and laid the groundwork for the law of universal gravitation, a cornerstone of modern natural science. Any one of these contributions alone would have shaped the scientific development of humanity over the following century, and together they significantly accelerated the overall progress of science.

The other landmark year is 1905, when a 26-year-old Einstein published four papers in quick succession in “Annalen der Physik.” They covered the photoelectric effect, setting the stage for quantum mechanics; Brownian motion, providing a pivotal framework for the analysis of stochastic processes; the theory of special relativity; and mass-energy equivalence, captured in the equation E = mc². In retrospect, each of these papers is considered to exceed the average level of Nobel Prize-winning work in physics, and Einstein himself received the Nobel Prize for his work on the photoelectric effect. Together, these contributions propelled humanity several strides forward in the journey of civilization.

The year 2023, recently behind us, is poised to be celebrated as another “Miracle Year,” thanks in large part to the emergence of ChatGPT.

Viewing 2023 as a “Miracle Year” in the history of technology isn’t just about acknowledging ChatGPT’s strides in natural language processing and generation. It’s also about recognizing a clear pattern in the development of large language models: scaling up model parameters and training data yields large, predictable gains in model performance. Moreover, this trend shows no clear ceiling in the short term, provided computing power keeps pace.

This capability extends far beyond language comprehension and conversation generation; it can be widely applied across various scientific fields. Taking the application of large language models in the biological sector as an example:

- In 2018, Nobel Laureate in Chemistry Frances Arnold said during her award ceremony, “Today we can for all practical purposes read, write, and edit any sequence of DNA, but we cannot compose it.” Five years later, in 2023, a team of researchers from Stanford University and Salesforce Research, Salesforce’s AI research division, published a paper in “Nature Biotechnology.” Using a GPT-style large language model, they generated an entirely new catalog of 1 million proteins. Among these, they identified two structurally distinct proteins with antibacterial function, potentially opening new ways to fight bacteria beyond traditional antibiotics. This marks a monumental leap in overcoming the hurdles of protein creation with AI’s assistance.

- Before this, DeepMind’s AI system AlphaFold predicted the structures of nearly all of the roughly 214 million proteins known to science within 18 months, a milestone that exceeds the cumulative output of structural biologists throughout history by several orders of magnitude.

The integration of AI models promises to transform industries drastically. From the hard-tech realms of biotech, material science, and drug discovery to the cultural spheres of law and the arts, a transformative wave is set to reshape these fields, with 2023 marking the beginning of it all.

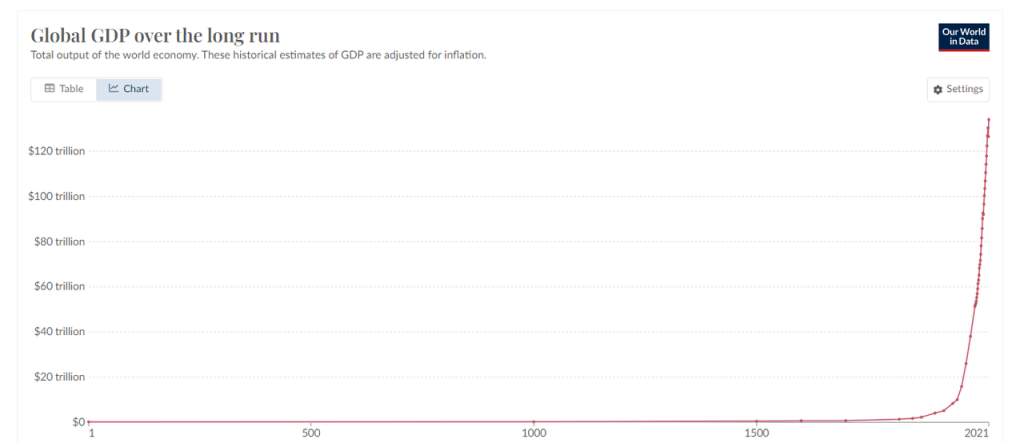

It’s widely acknowledged that the past century has witnessed an exponential rise in humanity’s ability to generate wealth. The swift advancement of AI technologies is expected to accelerate this process.

Merging AI and Crypto

To grasp the inherent need for the fusion of AI and crypto, it’s insightful to look at how their distinct features complement each other.

The Symbiosis of AI and Crypto Features

AI is distinguished by three main qualities:

- Stochasticity: AI’s content-generation mechanism is an opaque black box that is difficult to replicate, making its outputs inherently stochastic.

- Resource Intensive: AI is a resource-intensive industry, requiring significant amounts of energy, chips, and computing power.

- Human-like Intelligence: AI is (soon to be) capable of passing the Turing test, making it increasingly difficult to distinguish between humans and AI.

※ On October 30, 2023, researchers from the University of California, San Diego, published Turing test scores for GPT-3.5 and GPT-4. GPT-4 achieved a score of 41%, falling 9 percentage points short of the 50% pass mark, while humans scored 63% on the same test. The score measures how many participants judged their chat partner to be human. A score above 50% means that a majority believed they were talking to a human rather than a machine, so the AI is deemed to have passed the Turing test.

As AI paves the way for groundbreaking advancements in human productivity, it simultaneously introduces profound challenges to our society, specifically:

- How to verify and control the stochasticity of AI, turning it into an advantage rather than a flaw

- How to bridge the vast requirements for energy and computing power that AI demands

- How to distinguish between humans and AI

Crypto and blockchain technology could offer a compelling solution to these challenges, thanks to three key attributes:

- Determinism: Operations are based on blockchain, code, and smart contracts, with clear rules and boundaries. Inputs lead to predictable outputs, ensuring a high level of determinism.

- Efficient Resource Allocation: The crypto economy has fostered a vast, global, free market that enables swift pricing, fundraising, and transfer of resources. Tokens further accelerate the matching of supply and demand, using incentives to reach critical mass quickly.

- Trustlessness: With public ledgers and open-source code, anyone can easily verify operations, creating a “trustless” system. Zero-Knowledge (ZK) technology additionally preserves privacy during these verification processes.
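To make the contrast between AI’s stochasticity and blockchain determinism concrete, here is a minimal Python sketch. A SHA-256 hash stands in for deterministic on-chain logic, and softmax sampling over scores stands in for the way language models commonly pick their next token. The token names and scores (logits) are hypothetical, and real systems are far more complex.

```python
import hashlib
import math
import random


def deterministic_hash(message: str) -> str:
    """Like on-chain logic: identical input always yields identical output."""
    return hashlib.sha256(message.encode("utf-8")).hexdigest()


def sample_next_token(logits: dict, temperature: float, rng: random.Random) -> str:
    """Like an LLM decoder: draw one token from a softmax over (hypothetical) scores."""
    scaled = [value / temperature for value in logits.values()]
    peak = max(scaled)
    weights = [math.exp(v - peak) for v in scaled]  # softmax numerators, numerically stable
    return rng.choices(list(logits), weights=weights, k=1)[0]


if __name__ == "__main__":
    order = "invest $1000 in BTC"
    print(deterministic_hash(order) == deterministic_hash(order))  # always True

    toy_logits = {"buy": 2.0, "hold": 1.5, "sell": 0.5}  # hypothetical scores
    rng = random.Random()  # unseeded, so the draws differ from run to run
    print([sample_next_token(toy_logits, 1.0, rng) for _ in range(5)])
```

Seeding the generator (for example `random.Random(7)`) makes the draws reproducible. Pinning every source of randomness in this way is one route to making AI outputs checkable.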

To demonstrate the complementarity between AI and the crypto economy, let’s delve into three examples.

Example A: Overcoming Stochasticity with AI Agents Powered by the Crypto Economy

AI Agents are intelligent programs designed to perform tasks on behalf of humans according to their directives, with Fetch.AI being a notable example in this domain. Imagine we task our AI agent with executing a financial operation, such as “investing $1000 in BTC.” The AI agent could face two distinct scenarios:

Scenario 1: The agent is required to interact with traditional financial entities (e.g., BlackRock) to buy BTC ETFs, encountering many compatibility issues with centralized organizations, including KYC procedures, document verification, login processes, and identity authentication, all of which are notably burdensome at present.

Scenario 2: Within the native crypto economy, the process is much simpler. The agent could execute the trade directly through Uniswap or a similar exchange or aggregator, signing and confirming the order with your account, and acquire WBTC or another wrapped form of BTC. This procedure is efficient and streamlined. In essence, this is the role currently played by various trading bots, which act as basic AI agents focused on trading. As AI is further developed and integrated, these bots will pursue more intricate trading objectives. For instance, they might monitor 100 smart-money addresses on the blockchain, assess their trading strategies and success rates, allocate 10% of their funds to copy those trades for a week, halt if returns are unfavorable, and infer the likely reasons behind these strategies.
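The copy-trading routine described in Scenario 2 boils down to a few decision rules. Below is a minimal, hypothetical Python sketch of that logic only. It deliberately leaves out fetching wallet histories from the chain and executing swaps on a DEX. The names (`TrackedWallet`, `select_wallets`) and thresholds (`min_trades`, `min_return`) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class TrackedWallet:
    address: str     # hypothetical label for a monitored "smart money" address
    win_rate: float  # fraction of past trades that were profitable
    trades: int      # number of trades observed for this address


def select_wallets(wallets, min_trades=20, top_n=3):
    """Keep wallets with enough history, ranked by historical success rate."""
    eligible = [w for w in wallets if w.trades >= min_trades]
    return sorted(eligible, key=lambda w: w.win_rate, reverse=True)[:top_n]


def copy_trade_budget(total_funds, fraction=0.10):
    """Set aside a fixed share of funds (10% in the example above) for copying."""
    return total_funds * fraction


def should_halt(start_value, end_value, min_return=0.0):
    """After the trial week, stop if the copied trades returned less than min_return."""
    weekly_return = (end_value - start_value) / start_value
    return weekly_return < min_return


if __name__ == "__main__":
    wallets = [
        TrackedWallet("0xA", 0.62, 40),
        TrackedWallet("0xB", 0.71, 12),  # best win rate, but too little history
        TrackedWallet("0xC", 0.55, 90),
    ]
    print([w.address for w in select_wallets(wallets)])  # ['0xA', '0xC']
    print(should_halt(1_000.0, 950.0))  # True: the week lost money
```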

AI thrives within blockchain systems fundamentally because the rules of the crypto economy are explicitly defined and the system is permissionless. Operating under clear rules significantly reduces the risks tied to AI’s inherent stochasticity. For example, AI dominates humans in chess and video games because these environments are closed sandboxes with straightforward rules. By contrast, progress in autonomous driving has been more gradual: the open world is far more complex, and our tolerance for AI’s unpredictable problem-solving there is markedly lower.

Example B: Resource Consolidation via Token Incentives

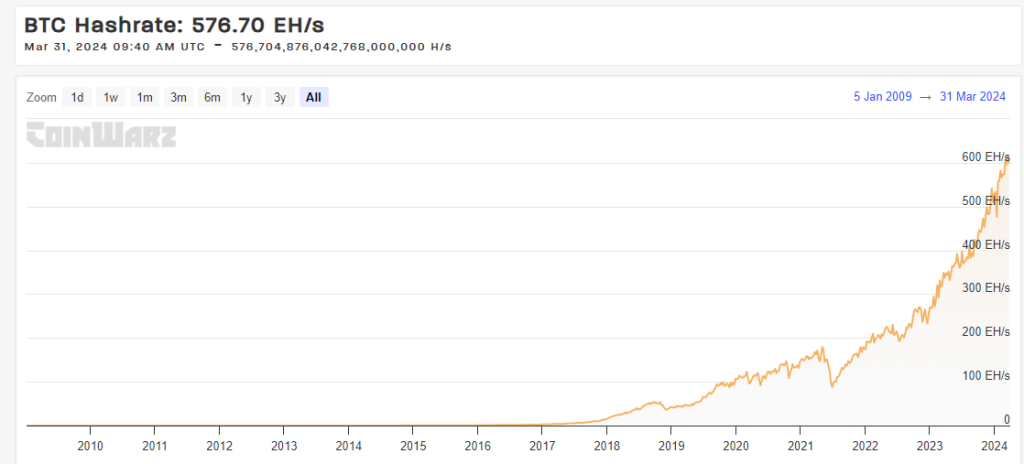

Bitcoin’s formidable global mining network, with a current total hash rate of 576.70 EH/s, outstrips the combined computing power of any country’s supercomputers. This growth has been propelled by the network’s simple and fair incentives.
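Bitcoin’s “simple and fair” incentive fits in a single formula: a miner’s expected reward is proportional to its share of the network hash rate. The sketch below assumes the 6.25 BTC block subsidy in effect before the April 2024 halving and roughly 144 blocks per day. It ignores transaction fees and luck, so treat it as an illustration, not a mining-profitability model.

```python
BLOCKS_PER_DAY = 24 * 60 // 10  # about one block every 10 minutes -> 144
BLOCK_SUBSIDY_BTC = 6.25        # assumed pre-April-2024-halving subsidy; fees ignored


def expected_daily_btc(miner_ehs: float, network_ehs: float,
                       subsidy: float = BLOCK_SUBSIDY_BTC) -> float:
    """Expected BTC per day: hash-rate share x blocks per day x subsidy."""
    share = miner_ehs / network_ehs
    return share * BLOCKS_PER_DAY * subsidy


if __name__ == "__main__":
    # A hypothetical miner with 1 EH/s on a 576.70 EH/s network
    print(round(expected_daily_btc(1.0, 576.70), 2))  # 1.56
```

Because reward scales linearly with contributed hash rate, every additional unit of hardware earns the same expected return. That rule is what has drawn so much hardware into the network.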

Moreover, DePIN projects such as Helium Mobile are using token incentives to build both the supply and demand sides of a market and foster network effects. IO.NET, the focus of this article, is a platform designed to aggregate AI computing power, aiming to unlock its latent potential through a token model.

Example C: Leveraging Open Source and ZK Proof to Differentiate Humans from AI While Protecting Privacy

Worldcoin, a Web3 project co-founded by OpenAI’s Sam Altman, employs a novel approach to identity verification. Utilizing a hardware device known as Orb, it leverages human iris biometrics to produce unique and anonymous hash values via Zero-Knowledge (ZK) technology, differentiating humans from AI. In early March 2024, the Web3 art project Drip started to implement Worldcoin ID to authenticate real humans and allocate rewards.

Worldcoin has recently open-sourced the hardware of its iris-scanning Orb, a move intended to demonstrate the security and privacy of its biometric data handling.
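The core idea behind “unique and anonymous” identifiers can be illustrated with a per-application hash, often called a nullifier. This Python sketch is a simplified, hypothetical illustration, not Worldcoin’s actual protocol. In particular, it omits the zero-knowledge proof that a real system uses to show the nullifier comes from a verified human. The app ID `"drip-drop-1"` is made up.

```python
import hashlib
import hmac


def nullifier_hash(identity_secret: bytes, app_id: str) -> str:
    """Computed on the user's side: stable for each (person, app) pair, and not
    reversible to the secret, so an app can deduplicate without learning who you are."""
    return hmac.new(identity_secret, app_id.encode("utf-8"), hashlib.sha256).hexdigest()


class OneClaimPerHuman:
    """A reward distributor that only ever sees nullifier hashes, never identities."""

    def __init__(self, app_id: str):
        self.app_id = app_id
        self.seen: set = set()

    def claim(self, nullifier: str) -> bool:
        if nullifier in self.seen:
            return False  # this person has already claimed
        self.seen.add(nullifier)
        return True


if __name__ == "__main__":
    drop = OneClaimPerHuman("drip-drop-1")
    alice = nullifier_hash(b"alice-secret", drop.app_id)
    print(drop.claim(alice), drop.claim(alice))  # True False
```

Because the hash is keyed by the app ID, the same person gets unrelated identifiers in different apps. This prevents cross-app tracking while still blocking double claims within one app.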

Overall, thanks to the determinism of code and cryptography, the resource-allocation and fundraising advantages of permissionless, token-based mechanisms, and the trustlessness of open-source code and public ledgers, the crypto economy has become a significant potential solution to the challenges that AI poses for human society.

The most immediate and commercially pressing of these challenges is AI products’ enormous appetite for computational resources, above all chips and computing power.

This is also the main reason distributed computing projects have led the gains within the AI sector during this bull market cycle.

The Business Imperative for Decentralized Computing

AI requires substantial computational resources, necessary for both model training and inference tasks.

It is well documented in the training of large language models that once model parameters and training data reach a sufficient scale, the models begin to exhibit unprecedented capabilities. The large improvements from one GPT generation to the next have been driven by exponential growth in the computing power used for model training.

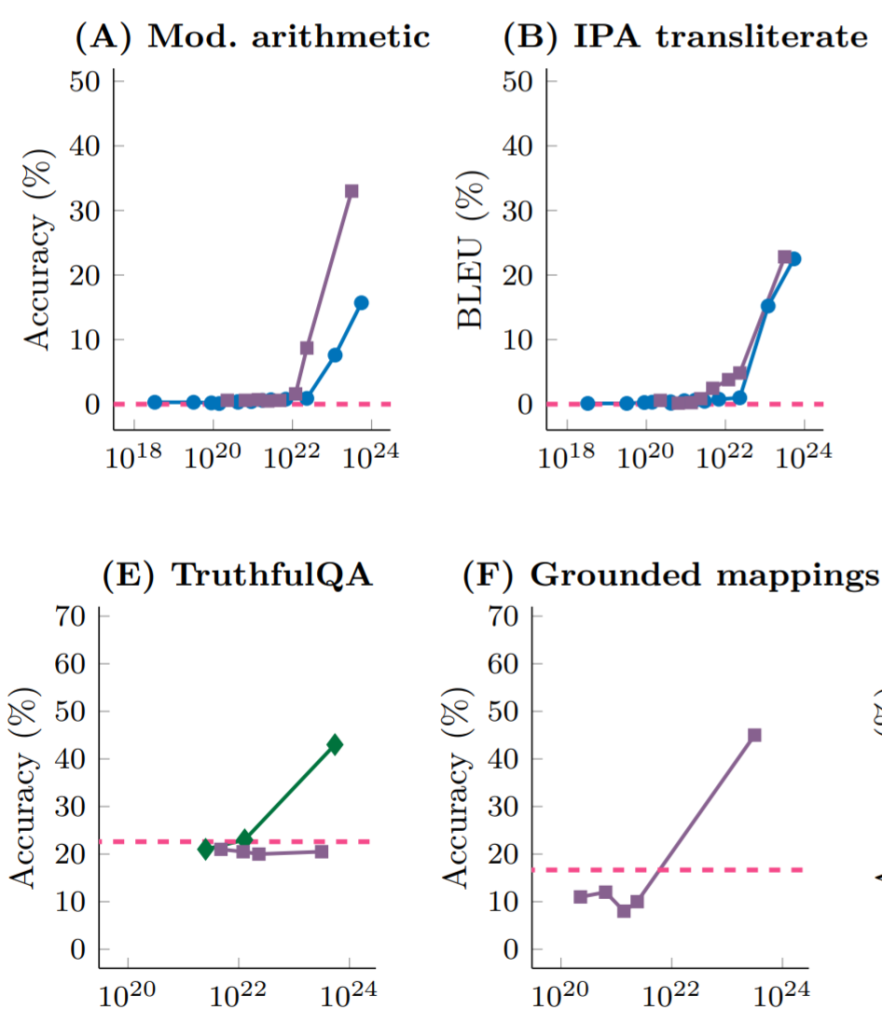

Research from DeepMind and Stanford University indicates that across various large language models and tasks, such as arithmetic, Persian question answering, and natural language understanding, the models perform close to random guessing unless model parameters (and, by extension, training compute) are scaled up significantly. Performance on these tasks stays near random until training compute reaches roughly 10^22 FLOPs. Beyond this critical threshold, performance improves dramatically across the language models studied.

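*FLOPs here refers to the total number of floating-point operations used in training, a measure of training compute. It should not be confused with FLOPS (floating-point operations per second), a measure of hardware speed.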
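To get a feel for the 10^22 FLOPs threshold, a widely used rule of thumb estimates training compute as about 6 × parameters × training tokens (forward plus backward pass). The sketch below applies that rule and converts the result into GPU-hours. The 312 TFLOP/s figure is NVIDIA’s published dense BF16 peak for the A100. The 40% utilization and the 1B-parameter, 2T-token model are assumptions for illustration.

```python
A100_BF16_PEAK = 312e12  # NVIDIA's dense BF16 Tensor Core peak for the A100, FLOP/s


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens


def gpu_hours(total_flops: float, peak_flops_per_sec: float, utilization: float) -> float:
    """GPU-hours needed if each GPU sustains `utilization` of its peak throughput."""
    return total_flops / (peak_flops_per_sec * utilization) / 3600


if __name__ == "__main__":
    flops = training_flops(1e9, 2e12)  # hypothetical 1B-parameter model, 2T tokens
    print(f"{flops:.1e} FLOPs")        # 1.2e+22 FLOPs
    hours = gpu_hours(1e22, A100_BF16_PEAK, utilization=0.4)
    print(f"{hours:,.0f} A100-hours")  # 22,258 A100-hours
```

Even this modest threshold comes to tens of thousands of A100-hours, which helps explain why access to GPUs has become the binding constraint.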

The principle that brute-force scaling of computing power “works miracles,” supported in theory and verified in practice, inspired OpenAI co-founder and CEO Sam Altman to propose an ambitious plan to raise $7 trillion. The funds would reportedly go toward building chip-manufacturing capacity ten times that of TSMC today (estimated to cost $1.5 trillion), with the remainder allocated to chip production and model training.

Beyond the computational demands of training AI models, inference also requires considerable computing power, albeit less than training. This ongoing need for chips and computational resources has become a fact of life for players in the AI field.

In contrast to centralized AI computing providers like Amazon Web Services, Google Cloud Platform, and Microsoft’s Azure, decentralized AI computing offers several compelling value propositions:

- Accessibility: Obtaining computing chips through services like AWS, GCP, or Azure can take weeks, and the most popular GPU models are frequently out of stock. Customers are also often bound by lengthy, rigid contracts with these large corporations. Distributed computing platforms, by contrast, offer flexible hardware options and easier access.

- Cost Efficiency: By leveraging idle chips and incorporating token subsidies from network protocols for chip and computing power providers, decentralized computing networks can offer computing power at reduced costs.

- Censorship Resistance: The supply of cutting-edge chips is currently dominated by major technology companies, and with the United States government ramping up scrutiny of AI computing services, the ability to obtain computing power in a decentralized, flexible, and unrestricted manner is increasingly becoming a clear necessity. This is a core value proposition of web3-based computing platforms.

If fossil fuels were the lifeblood of the Industrial Age, computing power may well be the lifeblood of the new digital era ushered in by AI, making the supply of computing power core infrastructure for the AI age. Just as stablecoins have emerged as a thriving offshoot of fiat currency in the Web3 era, might the distributed computing market grow into a significant segment of the fast-expanding AI computing market?

This is still an emerging market, and much remains to be seen. However, several factors could potentially drive the narrative or market adoption of decentralized computing:

- Persistent GPU Supply Challenges: The ongoing supply constraints for GPUs might encourage developers to explore decentralized computing platforms.

- Regulatory Expansion: Accessing AI computing services from major cloud platforms involves thorough KYC processes and scrutiny. This could lead to greater adoption of decentralized computing platforms, particularly in areas facing restrictions or sanctions.

- Token Price Incentives: Increases in token prices during bull markets could enhance the value of subsidies offered to GPU providers by platforms, attracting more vendors to the market, increasing its scale, and lowering costs for consumers.
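A toy calculation shows how rising token prices can lower costs for consumers. If providers earn token rewards on top of rental fees, the fee they need to charge to cover their costs falls as the token’s price rises. All figures below are hypothetical, and real reward schedules differ from network to network.

```python
def provider_income_usd(fee_income_usd: float, tokens_earned: float,
                        token_price_usd: float) -> float:
    """Total provider income: rental fees plus token rewards valued at market price."""
    return fee_income_usd + tokens_earned * token_price_usd


def min_viable_fee_usd(operating_cost_usd: float, tokens_earned: float,
                       token_price_usd: float) -> float:
    """Lowest rental fee that still covers operating costs once token rewards count."""
    return max(0.0, operating_cost_usd - tokens_earned * token_price_usd)


if __name__ == "__main__":
    # Hypothetical provider: $10/day to run a GPU, earning 8 reward tokens per day
    for price in (0.50, 1.00, 2.00):
        fee = min_viable_fee_usd(10.0, 8.0, price)
        print(f"token at ${price:.2f}: minimum fee ${fee:.2f}/day")
    # -> $6.00, $2.00, $0.00 per day
```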

At the same time, the challenges faced by decentralized computing platforms are also quite evident:

- Technical and Engineering Challenges

  - Proof of Work Issues: Deep learning models are hierarchical, with the output of each layer serving as the input to the next. Verifying that a computation was performed correctly therefore requires re-executing all the preceding work, which is neither simple nor efficient. To address this, decentralized computing platforms need to either develop new algorithms or use approximate verification techniques that provide probabilistic rather than absolute guarantees of correctness.

  - Parallelization Challenges: Decentralized computing platforms draw on a diverse array of chip suppliers, each typically offering limited computing power. A single supplier can rarely complete an AI model’s training or inference task quickly on its own. Tasks must therefore be decomposed and distributed in parallel to shorten overall completion time. This approach, however, introduces several complications: how to break tasks down (particularly complex deep learning tasks), how to handle data dependencies between subtasks, and the extra communication costs between devices.

  - Privacy Protection Issues: How can one ensure that the client’s data and models are not disclosed to whoever executes the tasks?

- Regulatory Compliance Challenges

  - Because decentralized computing platforms are permissionless on both the supply and demand sides, they can appeal to certain customers, and this is a key selling point. However, as AI regulatory frameworks evolve, these platforms may increasingly become targets of governmental scrutiny. Moreover, some GPU vendors are concerned about whether their leased computing resources are being used by sanctioned businesses or individuals.
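The “approximate verification” idea can be illustrated with random spot-checking, one common probabilistic technique. The Python sketch below is a minimal illustration, not how any particular platform verifies work. It assumes the worker reports every intermediate activation. The verifier then re-runs only a random sample of layers from the reported inputs instead of redoing the entire forward pass. The toy linear layers are hypothetical stand-ins for real network layers.

```python
import random
from math import comb

# Toy "model": each layer computes y = w * x + b (hypothetical stand-in for real layers)
LAYERS = [(1.5, 0.1), (0.8, -0.2), (2.0, 0.0), (0.5, 0.3)]


def run_layer(i: int, x: float) -> float:
    w, b = LAYERS[i]
    return w * x + b


def honest_trace(x0: float) -> list:
    """Worker runs every layer and reports each intermediate activation."""
    trace = [x0]
    for i in range(len(LAYERS)):
        trace.append(run_layer(i, trace[-1]))
    return trace


def spot_check(trace: list, num_checks: int, rng: random.Random) -> bool:
    """Verifier re-executes only a random sample of layers, feeding each one the
    worker's own reported input, so no layer depends on re-running earlier ones."""
    for i in rng.sample(range(len(LAYERS)), num_checks):
        if abs(run_layer(i, trace[i]) - trace[i + 1]) > 1e-9:
            return False
    return True


def detection_probability(num_layers: int, faked_layers: int, num_checks: int) -> float:
    """Chance that checking `num_checks` distinct random layers hits at least one
    of `faked_layers` layers whose reported output does not match its input."""
    if faked_layers == 0:
        return 0.0
    return 1 - comb(num_layers - faked_layers, num_checks) / comb(num_layers, num_checks)


if __name__ == "__main__":
    trace = honest_trace(1.0)
    print(spot_check(trace, 2, random.Random()))  # honest work always passes
    print(detection_probability(100, 1, 10))      # ~0.1 for one faked layer in 100
```

The trade-off is explicit: checking more layers raises the chance of catching a dishonest worker, at the cost of more verification work. This is the “probabilistic assurance rather than absolute determinism” described above.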

In summary, the primary users of decentralized computing platforms are professional developers and small to medium-sized enterprises. Unlike cryptocurrency and NFT investors, these clients prioritize the stability and continuity of the services provided, and price is not necessarily their foremost concern. Decentralized computing platforms still have a long way to go before they win widespread acceptance from this discerning user base.

Next, we analyze IO.NET, a new decentralized computing project this cycle, in detail, and compare it with similar projects to estimate its potential market valuation after launch.

Decentralized AI Computing Platform: IO.NET

Project Overview

IO.NET is a decentralized computing network that has built a two-sided market around chips. The supply side consists of globally distributed computing power, primarily GPUs but also CPUs and Apple’s integrated GPUs (iGPUs). The demand side consists of AI engineers seeking to complete AI model training or inference tasks.

The official IO.NET website states its mission:

Our Mission

Putting together one million GPUs in a DePIN – decentralized physical infrastructure network.

Compared to traditional cloud AI computing services, this platform highlights several key advantages:

- Flexible Configuration: AI engineers have the freedom to select and assemble the necessary chips into a “cluster” tailored to their specific computing tasks.

- Rapid Deployment: Unlike the lengthy approval and wait times associated with centralized providers such as AWS, deployment on this platform can be completed in just seconds, allowing for immediate task commencement.

- Cost Efficiency: The service costs are up to 90% lower than those offered by mainstream providers.

Furthermore, IO.NET plans to launch additional services in the future, such as an AI model store.

Product Mechanism and Business Metrics

Product Mechanisms and Deployment Experience

Similar to major platforms like Amazon Cloud, Google Cloud, and Alibaba Cloud, IO.NET offers a computing service called IO Cloud. It runs on a distributed, decentralized network of chips and supports the execution of Python-based code for AI and machine-learning applications.

The basic building block of IO Cloud is the Cluster: a self-coordinating group of GPUs designed to handle computing tasks efficiently. AI engineers can customize clusters to meet their specific needs.

The user interface of IO.NET is highly user-friendly. If you’re looking to deploy your own chip cluster for AI computing tasks, simply navigate to the Clusters page on the platform, where you can effortlessly configure your desired chip cluster according to your requirements.

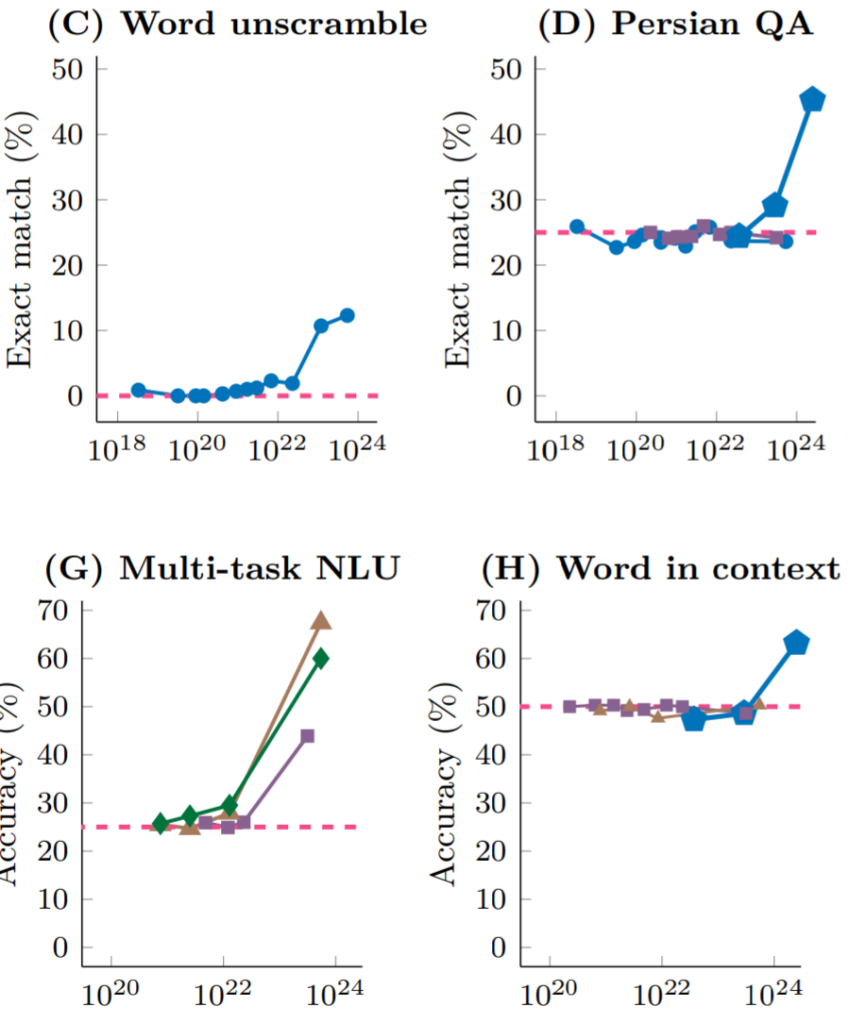

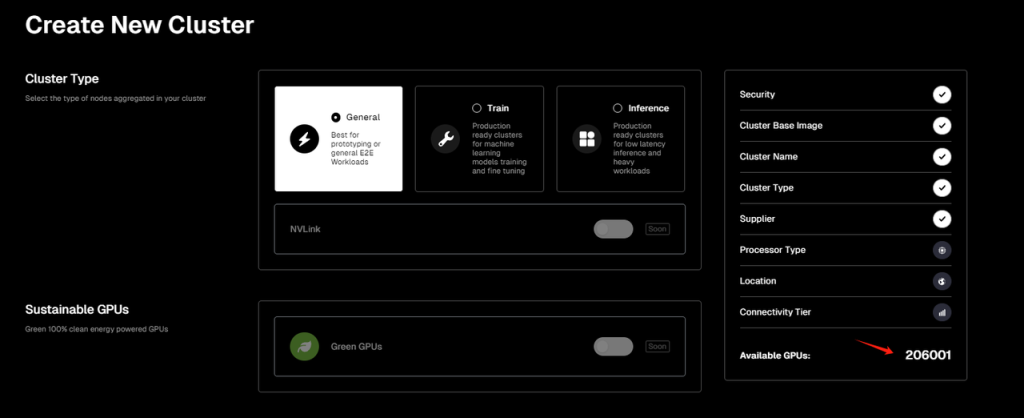

First, you need to select your cluster type, with three options available:

- General: Provides a general environment, suitable for early stages of a project where specific resource requirements are not yet clear.

- Train: A cluster designed specifically for the training and fine-tuning of machine learning models. This option provides additional GPU resources, higher memory capacity, and/or faster network connections to accommodate these intensive computing tasks.

- Inference: A cluster designed for low-latency inference and high-load work. In the context of machine learning, inference refers to using trained models to predict or analyze new datasets and provide feedback. Therefore, this option focuses on optimizing latency and throughput to support real-time or near-real-time data processing needs.

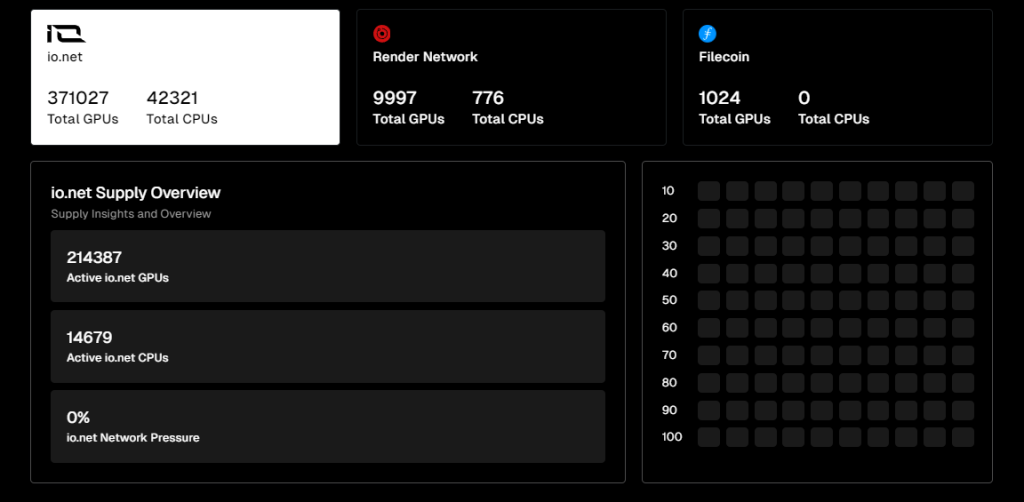

Next, you need to choose a supplier for your cluster. IO.NET has partnerships with Render Network and the Filecoin miner network, so users can source chips for their computing clusters from IO.NET or either of the other two networks. This effectively positions IO.NET as an aggregator (note: Filecoin services are temporarily offline). IO.NET currently has over 200,000 GPUs available online, while Render Network has over 3,700.

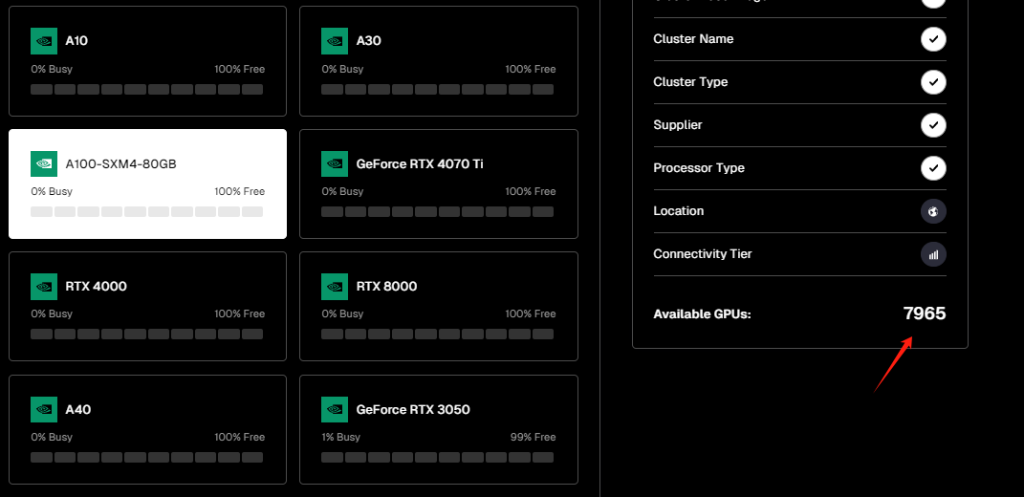

Following this, you’ll proceed to the hardware selection phase of your cluster. Presently, IO.NET lists only GPUs as the available hardware option, excluding CPUs or Apple’s iGPUs (M1, M2, etc.), with the GPUs primarily consisting of NVIDIA products.

Among the officially listed GPU hardware options, my check on the day of writing showed 206,001 GPUs online in the IO.NET network. The GPU with the highest availability was the GeForce RTX 4090, with 45,250 units, followed by the GeForce RTX 3090 Ti, with 30,779 units.

Furthermore, 7,965 units of the A100-SXM4-80GB (each priced above $15,000) are available online. This chip is considerably more efficient for AI computing tasks such as machine learning, deep learning, and scientific computing.

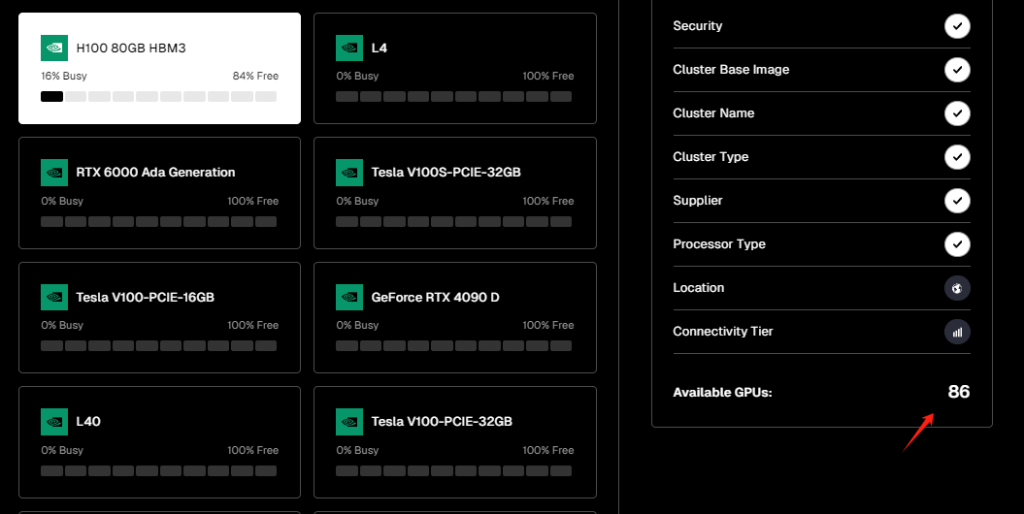

The NVIDIA H100 80GB HBM3, which is designed from the ground up for AI (with a market price of over $40,000), delivers training performance that is 3.3 times greater and inference performance that is 4.5 times higher than the A100. Currently, there are 86 units available online.

Once the hardware type for the cluster has been chosen, users will need to specify further details such as the geographic location of the cluster, connectivity speed, the number of GPUs, and the duration.

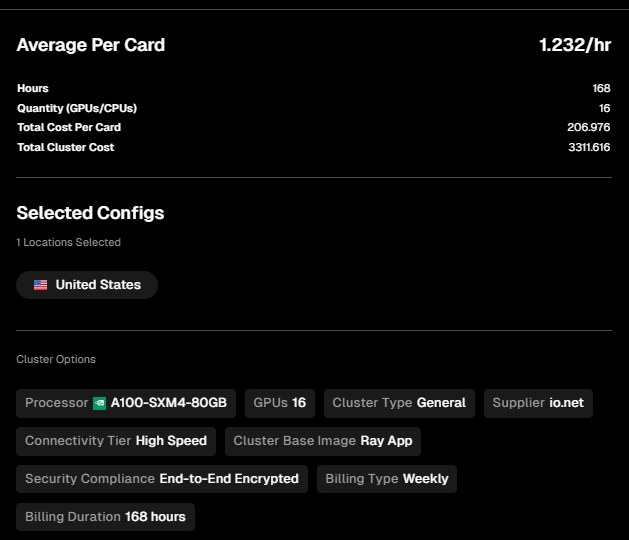

Finally, IO.NET will calculate a detailed bill based on your selected options. As an illustration, consider the following cluster configuration:

- Cluster Type: General

- 16 A100-SXM4-80GB GPUs

- Connectivity tier: High Speed

- Geographic location: United States

- Duration: 1 week

The total bill for this configuration is $3,311.62 (16 GPUs × 168 hours × $1.232 per GPU-hour), which works out to an hourly rental price of $1.232 per card.

The hourly rental price for a single A100-SXM4-80GB on Amazon Web Services, Google Cloud, and Microsoft Azure is $5.12, $5.07, and $3.67 respectively (data sourced from Cloud GPU Comparison, actual prices may vary depending on contract details).

Consequently, when it comes to cost, IO.NET offers chip computing power at prices much lower than those of mainstream providers. Additionally, the flexibility in supply and procurement options makes IO.NET an attractive choice for many users.
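The cost comparison above is simple arithmetic, sketched here in Python. It assumes flat per-GPU-hour pricing with no storage, egress, or platform fees. The rates are the ones quoted in the text, and actual cloud prices vary with contract terms.

```python
from dataclasses import dataclass

HOURS_PER_WEEK = 7 * 24


@dataclass
class ClusterQuote:
    """Assumed flat-rate pricing: GPUs x hours x hourly rate, nothing else."""
    gpus: int
    hours: float
    usd_per_gpu_hour: float

    def total_usd(self) -> float:
        return self.gpus * self.hours * self.usd_per_gpu_hour


# Hourly A100-SXM4-80GB rates quoted in the text
RATES = {"IO.NET": 1.232, "AWS": 5.12, "Google Cloud": 5.07, "Azure": 3.67}


def weekly_costs(rates: dict, gpus: int = 16, hours: float = HOURS_PER_WEEK) -> dict:
    """Cost of the 16-GPU, one-week configuration at each provider's rate."""
    return {name: ClusterQuote(gpus, hours, rate).total_usd() for name, rate in rates.items()}


if __name__ == "__main__":
    costs = weekly_costs(RATES)
    for name, cost in costs.items():
        discount = 1 - costs["IO.NET"] / cost
        print(f"{name:>12}: ${cost:>10,.2f}   IO.NET discount: {discount:.0%}")
```

At these list prices, the same week of compute costs about $13,762.56 on AWS and $9,864.96 on Azure. IO.NET’s quote is therefore roughly 76% and 66% cheaper, respectively.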

Business Overview

Supply Side

As of April 4th, 2024, official figures reveal that IO.NET had a total GPU supply of 371,027 units and a CPU supply of 42,321 units on the supply side. In addition, Render Network, as a partner, had an additional 9,997 GPUs and 776 CPUs connected to the network’s supply.

At the time of writing, 214,387 of the GPUs integrated with IO.NET were online, resulting in an online rate of 57.8%. The online rate for GPUs coming from Render Network was 45.1%.

What does this data on the supply side imply?

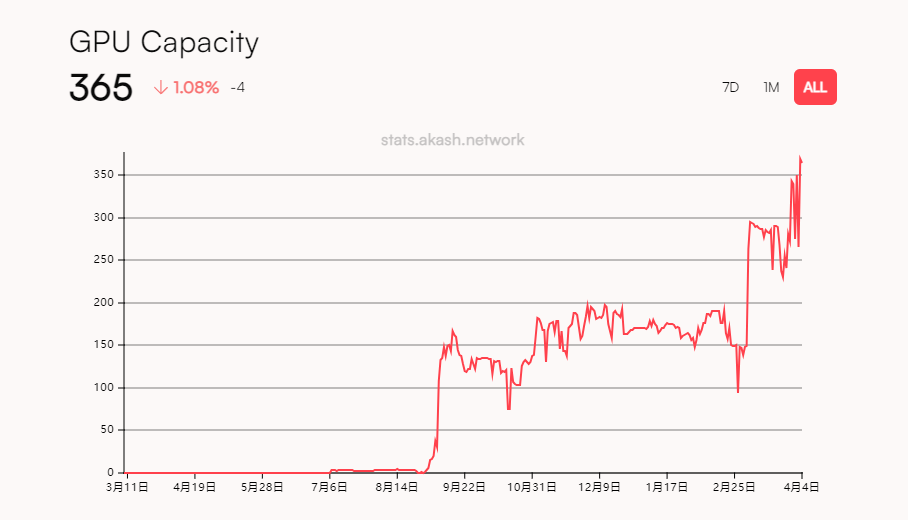

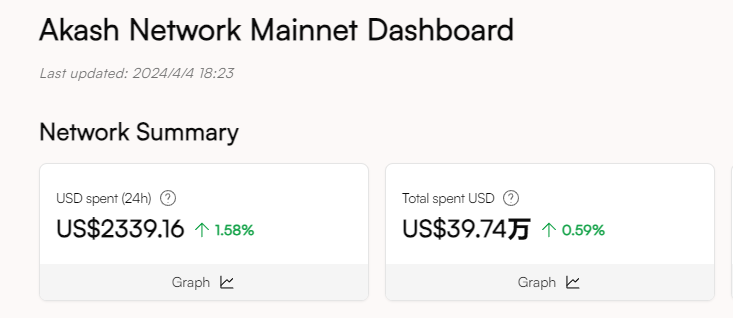

To provide a benchmark, let’s bring in Akash Network, a more seasoned decentralized computing project.

Akash Network launched its mainnet as early as 2020, initially focusing on decentralized services for CPUs and storage. It rolled out a testnet for GPU services in June 2023 and subsequently launched the mainnet for decentralized GPU computing power in September of the same year.

According to official data from Akash, even though the supply side has been growing continuously since the launch of its GPU network, the total number of GPUs connected to the network remains only 365.

In terms of GPU supply, IO.NET operates on a dramatically larger scale than Akash Network and has established itself as the largest supply side in the decentralized GPU computing sector.

Demand Side

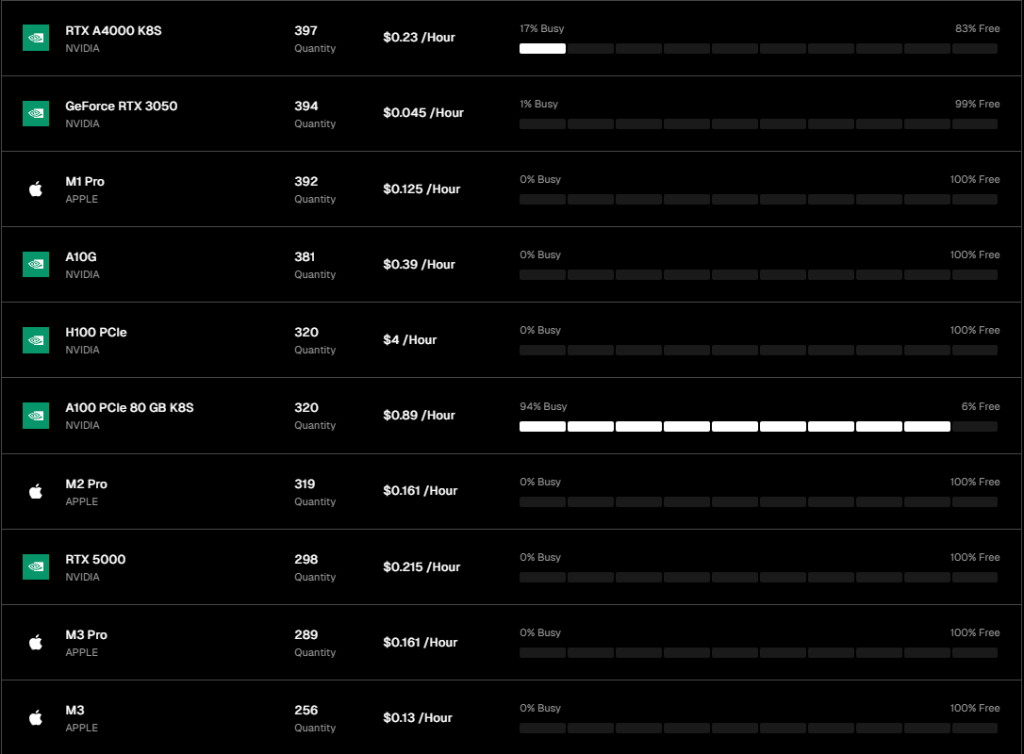

From the demand side, IO.NET is still in the early stages of market cultivation, with a relatively small total volume of computation tasks being executed on its network. The majority of GPUs are online but idle, showing a workload percentage of 0%. Only four chip types—the A100 PCIe 80GB K8S, RTX A6000 K8S, RTX A4000 K8S, and H100 80GB HBM3—are actively engaged in processing tasks, and among these, only the A100 PCIe 80GB K8S is experiencing a workload above 20%.

The network’s official stress level reported for the day stood at 0%, indicating that a significant portion of the GPU supply is currently in an online but idle state.

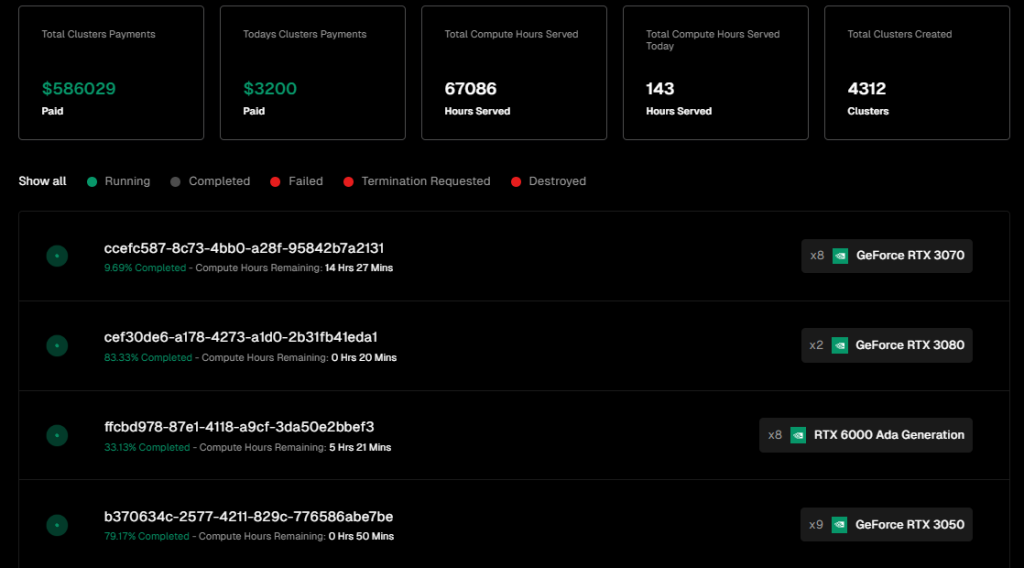

Financially, IO.NET has accrued $586,029 in service fees to date, with $3,200 of that total generated on the most recent day.

IO.NET’s network settlement fees, both cumulative and daily, are close to Akash’s. However, the bulk of Akash’s revenue comes from its CPU offerings, backed by an inventory of more than 20,000 CPUs.

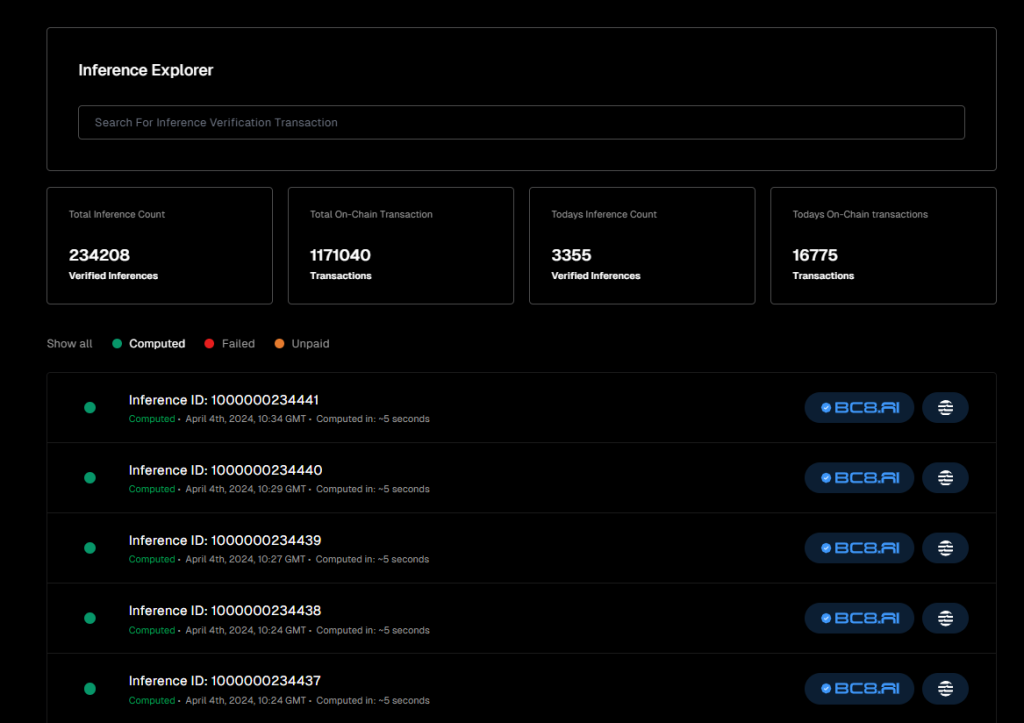

Additionally, IO.NET has disclosed detailed data for AI inference tasks processed by the network. As of the latest report, the platform has successfully processed and validated over 230,000 inference tasks, though most of this volume stems from BC8.AI, a project sponsored by IO.NET.

IO.NET’s supply side is expanding rapidly, driven by expectations of an airdrop and a community event called “Ignition,” which has quickly attracted a significant amount of AI computing power. The demand side, however, is still nascent, with insufficient organic demand. Whether this sluggish demand stems from customer outreach that has not yet begun or from an unstable service experience that limits large-scale adoption requires further evaluation.

Given that the shortfall in AI computing power is unlikely to close quickly, many AI engineers and projects are exploring alternatives, which could increase interest in decentralized providers. Moreover, IO.NET has not yet introduced economic incentives or campaigns to stimulate demand. As the product experience continues to improve, a better balance between supply and demand looks achievable.

Team Background and Fundraising Overview

Team Profile

The core team of IO.NET initially focused on quantitative trading. Up until June 2022, they were engaged in creating institutional-level quantitative trading systems for equities and cryptocurrencies. Driven by the system backend’s demand for computing power, the team began exploring the potential of decentralized computing and ultimately focused on the specific issue of reducing the cost of GPU computing services.

Founder & CEO: Ahmad Shadid

Before founding IO.NET, Ahmad Shadid had worked in quantitative finance and financial engineering, and he is also a volunteer at the Ethereum Foundation.

CMO & Chief Strategy Officer: Garrison Yang

Garrison Yang officially joined IO.NET in March 2024. He was previously VP of Strategy and Growth at Avalanche and is an alumnus of the University of California, Santa Barbara.

COO: Tory Green

Tory Green serves as the Chief Operating Officer of IO.NET. He was previously the COO of Hum Capital and the Director of Business Development and Strategy at Fox Mobile Group. He graduated from Stanford University.

IO.NET’s LinkedIn profile indicates that the team is headquartered in New York, USA, with a branch office in San Francisco, and employs over 50 staff members.

Funding Overview

IO.NET has publicly announced only one funding round: a Series A completed in March 2024, which raised $30 million at a $1 billion valuation. The round was led by Hack VC, with participation from other investors including Multicoin Capital, Delphi Digital, Foresight Ventures, Animoca Brands, Continue Capital, Solana Ventures, Aptos, LongHash Ventures, OKX Ventures, Amber Group, SevenX Ventures, and ArkStream Capital.

Notably, the investment from the Aptos Foundation might have influenced the BC8.AI project’s decision to switch from using Solana for its settlement and accounting processes to the similarly high-performance Layer 1 blockchain, Aptos.

Valuation Estimation

According to previous statements by founder and CEO Ahmad Shadid, IO.NET is set to launch its token by the end of April 2024.

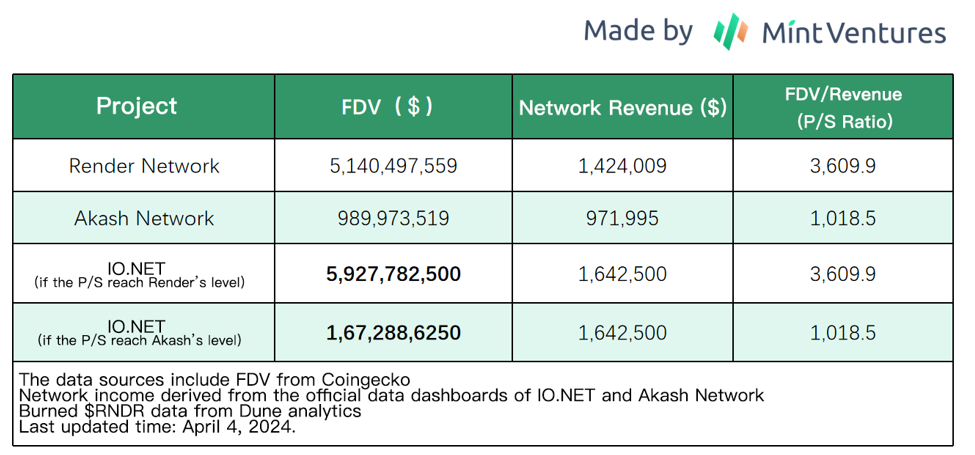

IO.NET has two benchmark projects that serve as references for valuation: Render Network and Akash Network, both of which are representative decentralized computing projects.

There are two principal methods to estimate IO.NET’s valuation:

- Price-to-Sales (P/S) ratio: the FDV relative to revenue

- FDV-to-Chip ratio (M/C ratio): the FDV relative to the number of chips in the network

We will start by examining the potential valuation using the Price-to-Sales ratio:

Under the price-to-sales ratio, Akash sets the conservative end of IO.NET’s estimated valuation range and Render the high end, implying an FDV of $1.67 billion to $5.93 billion.

However, IO.NET’s recent progress, more compelling narrative, smaller initial market cap, and broader supply base suggest that its FDV could well surpass Render Network’s.

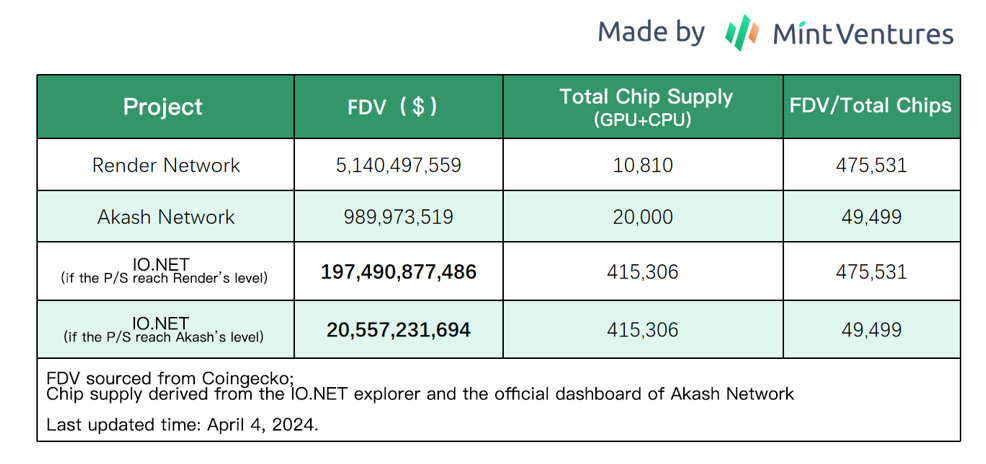

Next, consider a second valuation perspective: the “FDV-to-Chip Ratio.”

In the context of a market where demand for AI computing power exceeds supply, the most crucial element of decentralized AI computing networks is the scale of GPU supply. Therefore, we can use the “FDV-to-Chip Ratio,” which is the ratio of the project’s fully diluted value to the number of chips within the network, to infer the possible valuation range of IO.NET, providing readers with a reference.

Using the FDV-to-chip ratio, with Render Network setting the upper benchmark and Akash Network the lower, places IO.NET’s valuation between $20.6 billion and $197.5 billion.
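Both methods are the same relative-valuation calculation: apply a peer’s FDV-per-unit multiple to IO.NET’s own metric. The Python sketch below shows that calculation. The peer FDV and chip count are placeholders, not Render’s or Akash’s real figures. Only IO.NET’s GPU counts come from the text. The sketch also shows how much the chip-based estimate depends on whether total or online GPUs are counted.

```python
def implied_fdv(peer_fdv: float, peer_metric: float, target_metric: float) -> float:
    """Relative valuation: apply a peer's FDV-per-unit multiple to the target.

    metric = revenue (same period for peer and target) -> Price-to-Sales method
    metric = chips in the network                      -> FDV-to-Chip method
    """
    if peer_metric <= 0:
        raise ValueError("peer_metric must be positive")
    return peer_fdv / peer_metric * target_metric


IO_TOTAL_GPUS = 371_027   # total GPU supply as of April 4, 2024 (from the text)
IO_ONLINE_GPUS = 214_387  # GPUs online at the time of writing (from the text)

# Placeholder peer values for illustration only, NOT real Render or Akash figures
PEER_FDV, PEER_CHIPS = 1_000_000_000, 10_000

on_total = implied_fdv(PEER_FDV, PEER_CHIPS, IO_TOTAL_GPUS)
on_online = implied_fdv(PEER_FDV, PEER_CHIPS, IO_ONLINE_GPUS)

if __name__ == "__main__":
    print(f"total vs. online chip count changes the estimate by {on_total / on_online:.2f}x")
    # -> 1.73x
```

Whatever multiple is used, counting all 371,027 GPUs instead of the 214,387 online ones inflates the chip-based estimate by about 1.73x. This sensitivity is one reason to treat chip-based valuations with caution.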

Enthusiasts of the IO.NET project might see this as a highly optimistic estimation of market cap.

Keep in mind that IO.NET’s currently large number of online chips has been boosted by airdrop expectations and incentive activities. How much of this supply actually stays online after the project officially launches remains to be seen.

Overall, valuations derived from the price-to-sales ratio could offer more reliable insights.

Built on Solana and positioned at the convergence of AI and DePIN, IO.NET is on the cusp of its token launch, and it will be worth watching how its market cap develops after launch.